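1: Robots protocol

The Robots protocol (also called the crawler protocol or robot protocol) is formally the "Robots Exclusion Protocol". Through it, a website tells search engines which pages may be crawled and which may not.

Spiders are not obliged to obey Robots, so dealing with rogue spiders requires the second method.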
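To make the voluntary nature of the protocol concrete, here is a minimal Python sketch of what a well-behaved crawler does before fetching a page, using the standard library's `urllib.robotparser`. The robots.txt rules and the `BadBot` name are hypothetical and serve only as an illustration. The check runs entirely on the crawler's side, so a rogue spider can simply skip it.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, used only for illustration.
ROBOTS_TXT = """\
User-agent: *
Disallow: /templets/

User-agent: BadBot
Disallow: /
"""


def is_allowed(user_agent: str, url: str, robots_txt: str = ROBOTS_TXT) -> bool:
    """Return True if robots_txt permits user_agent to fetch url."""
    parser = RobotFileParser()
    # parse() takes the file's lines directly, so no network access is needed.
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


# A polite crawler asks first; nothing forces a rogue one to do so.
print(is_allowed("Googlebot", "https://chaihongjun.me/index.html"))
print(is_allowed("BadBot/1.0", "https://chaihongjun.me/index.html"))
```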

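2: Blocking by UA

The server environment is CentOS + nginx, which is used as the example here:

The nginx configuration file for the individual domain is as follows: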

```nginx
server {
    listen 80;
    server_name chaihongjun.me;
    access_log /alidata1/www/logs/chaihongjun.me_nginx.log combined;
    index index.html index.htm index.jsp index.php;
    include none.conf;
    root /alidata1/www/web/chaihongjun.me;
    error_page 404 /404.html;

    location ~ .*\.(php|php5)?$ {
        #fastcgi_pass remote_php_ip:9000;
        fastcgi_pass unix:/dev/shm/php-cgi.sock;
        fastcgi_index index.php;
        include fastcgi.conf;
    }

    location / {
        ###### Added below: the file with the rules that deny certain UAs
        include agent_deny.conf;
    }

    # No need to log favicon.ico requests
    location = /favicon.ico {
        log_not_found off;
        access_log off;
    }

    ########## the templets files protection ###########
    location ~* ^/templets {
        rewrite ^/templets/(.*)$ https://chaihongjun.me/ permanent;
    }

    location ~ .*\.(gif|jpg|jpeg|png|bmp|swf|flv|ico)$ {
        expires 30d;
        access_log off;
    }

    location ~ .*\.(js|css)?$ {
        expires 30d;
        access_log off;
    }
}

################# 301 ####################
server {
    listen 80;
    server_name www.chaihongjun.me;
    return 301 $scheme://chaihongjun.me$request_uri;
}
```

The contents of agent_deny.conf are as follows:

```nginx
# Block crawling by tools such as Scrapy
if ($http_user_agent ~* (Scrapy|Curl|HttpClient|Teleport|TeleportPro)) {
    return 403;
}

# Block the listed UAs and requests with an empty UA
if ($http_user_agent ~ "FeedDemon|Indy Library|Alexa Toolbar|AskTbFXTV|AhrefsBot|CrawlDaddy|CoolpadWebkit|Java|Feedly|UniversalFeedParser|ApacheBench|Microsoft URL Control|Swiftbot|ZmEu|oBot|jaunty|Python-urllib|lightDeckReports Bot|YYSpider|DigExt|HttpClient|heritrix|EasouSpider|Ezooms|WBSearchBot|Elefent|psbot|SiteExplorer|MJ12bot|larbin|TurnitinBot|wsAnalyzer|ichiro|YoudaoBot|^$" ) {
    return 403;
}

# Block request methods other than GET, HEAD and POST
if ($request_method !~ ^(GET|HEAD|POST)$) {
    return 403;
}
```
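Before deploying rules like these, it can help to check offline which user agents they would catch. The Python sketch below is a rough emulation, not nginx itself: it assumes that for plain alternations like these, Python's `re` behaves the same as nginx's PCRE matching. `TOOLS` is copied from the first rule (`~*`, case-insensitive), while `BOTS` is a deliberately abbreviated subset of the second rule (`~`, case-sensitive). The function name `is_denied` is made up for this example.

```python
import re

# First rule: matched with ~* in nginx, so case-insensitive here.
TOOLS = re.compile(r"(Scrapy|Curl|HttpClient|Teleport|TeleportPro)", re.IGNORECASE)

# Abbreviated subset of the second rule: matched with ~, so case-sensitive.
# The trailing ^$ alternative matches an empty User-Agent.
BOTS = re.compile(r"AhrefsBot|MJ12bot|Python-urllib|Java|^$")


def is_denied(user_agent: str) -> bool:
    """Return True if either emulated rule would answer 403 for this UA."""
    return bool(TOOLS.search(user_agent) or BOTS.search(user_agent))


for ua in ["", "curl/7.29.0", "Mozilla/5.0 (compatible; AhrefsBot/5.0)",
           "Mozilla/5.0 (Windows NT 10.0) Chrome/120"]:
    print(repr(ua), is_denied(ua))
```

Because the second rule is case-sensitive, a bot that changes the casing of its name (for example `ahrefsbot`) would slip past it, which is one reason to prefer `~*` for such lists.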

Note that agent_deny.conf must be placed in the conf directory, not in the vhost subdirectory beneath it, so mind the path when referencing it inside location / {}: include agent_deny.conf;

______ UPDATE 2016.6.3 ______

If agent_deny.conf is placed under vhost and the include path is changed accordingly, nginx's configuration test still reports an error.

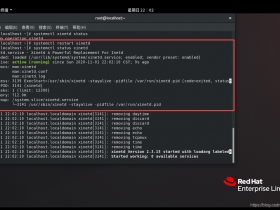

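With agent_deny.conf loaded, testing with curl shows that the targeted spiders are indeed blocked. However, the ROBOTS check on the Baidu Webmaster Platform can no longer detect the robots.txt file.

The problem actually lies in the final block: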

```nginx
# Block request methods other than GET, HEAD and POST
if ($request_method !~ ^(GET|HEAD|POST)$) {
    return 403;
}
```

Removing this block is enough to fix it.

The code this site currently uses to block junk spiders and UAs:

```nginx
location / {
    ......
    if ($http_user_agent ~* "Applebot|SEOkicks-Robot|DotBot|YunGuanCe|Exabot|spiderman|Scrapy|HttpClient|Teleport|TeleportPro|SiteExplorer|WBSearchBot|Elefent|psbot|TurnitinBot|wsAnalyzer|ichiro|ezooms|FeedDemon|Indy Library|Alexa Toolbar|AskTbFXTV|AhrefsBot|CrawlDaddy|CoolpadWebkit|Java|Feedly|UniversalFeedParser|ApacheBench|Microsoft URL Control|Swiftbot|ZmEu|oBot|jaunty|Python-urllib|lightDeckReports Bot|YYSpider|DigExt|HttpClient|MJ12bot|heritrix|EasouSpider|Ezooms|^$") {
        return 403;
    }
}
```

To block anything else, first look up its UA in the log, then add it to the configuration and reload so that it takes effect.
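As a rough way to do that lookup, the Python sketch below counts user agents in an access log. It assumes the log uses nginx's predefined `combined` format (as the `access_log` directive above does), where the user agent is the last quoted field on each line. The sample lines and the name `count_user_agents` are made up for illustration.

```python
import re
from collections import Counter

# In the "combined" format the user agent is the last quoted field of a line.
UA_FIELD = re.compile(r'"([^"]*)"\s*$')


def count_user_agents(lines):
    """Count how often each user agent appears in an iterable of log lines."""
    counts = Counter()
    for line in lines:
        match = UA_FIELD.search(line)
        if match:
            counts[match.group(1)] += 1
    return counts


# Hypothetical log lines in combined format.
SAMPLE = [
    '203.0.113.5 - - [03/Jun/2016:10:00:00 +0800] "GET / HTTP/1.1" 200 512 "-" "Scrapy/1.1.0 (+http://scrapy.org)"',
    '203.0.113.5 - - [03/Jun/2016:10:00:01 +0800] "GET /a.html HTTP/1.1" 200 512 "-" "Scrapy/1.1.0 (+http://scrapy.org)"',
    '198.51.100.7 - - [03/Jun/2016:10:00:02 +0800] "GET / HTTP/1.1" 200 512 "-" "Mozilla/5.0"',
]

for agent, hits in count_user_agents(SAMPLE).most_common():
    print(hits, agent)
```

For a real log, an open file object can be passed in place of `SAMPLE`, for example the file at /alidata1/www/logs/chaihongjun.me_nginx.log. The most frequent unfamiliar agents are the natural candidates for the deny list.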